How is software testing done at Geotab?

Is it magic or advanced tech? Sr. Software Developer Pavel Klimenkov provides an inside look at MyGeotab software testing.

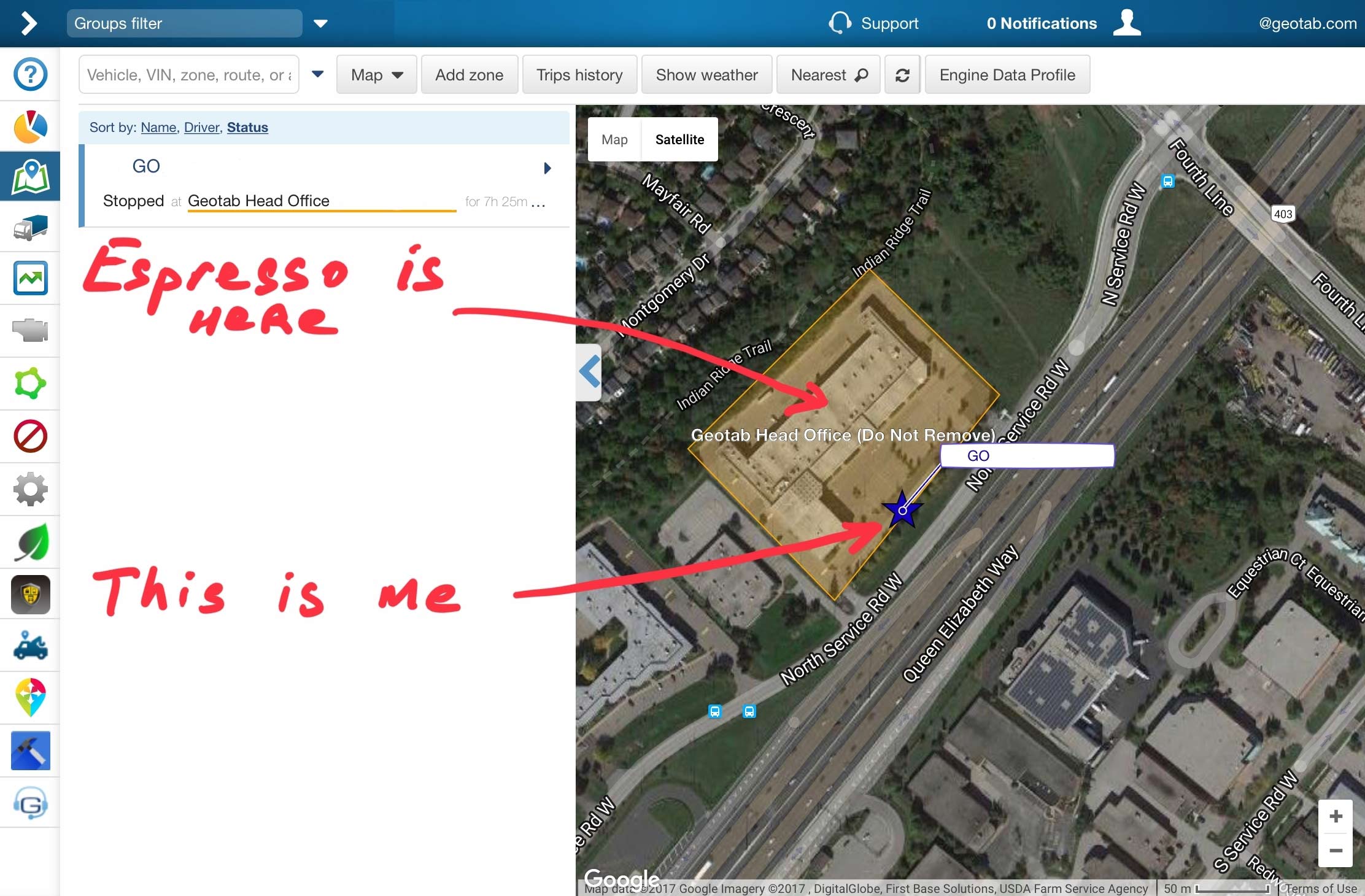

If you ever have the chance to visit our office in Canada (I highly recommend having an espresso while you’re here) and hang out in the departments involved in software development, you’ll probably notice that none of them have testing engineers. There’s no single person in our company whose sole responsibility is testing MyGeotab and the other software that we develop. Yet we keep producing one major release per month, and our surveys show that customers are happy with the product. How is that possible?

Well, not having dedicated quality assurance (QA) engineers doesn’t mean that we don’t test. In fact, we test more than any team of human testers probably could.

Firstly, along with building our software, we’re also its customers, and we’re always using the cutting-edge version of the product.

Secondly, we run 5 hours of automated testing on every code change we make. And by 5 hours I mean “machine” hours. It would take a week or so and a tank of coffee for a human to do the same amount of work. That’s a lot of tests!

We Are the Customers

The principle we follow is called “eating our own dog food.” Whenever a new employee starts at Geotab, one of the first things they get is their very own personal Geotab GO device. Obviously, as individuals, we don’t use them as a manager of a large fleet would, but it still means that a large share of features is used daily by the people who actually developed them. Personally, I like checking my trip distances and durations, engine diagnostics, fuel consumption and exception events, and if something doesn’t work as expected, I know exactly who to talk to.

Automated Software Testing

The main secret ingredient is automated testing. Whenever a person (or robot, we don’t discriminate) makes a change in any piece of our code and tries to sneak it into the main code repository, we launch our tests. All of them: 5,000 individual tests that take 5+ machine hours to run.

The idea is not new and has been known for decades as continuous integration. But as Sir Arthur C. Clarke once stated in his third law: “Any sufficiently advanced technology is indistinguishable from magic.” We have added plenty of advanced tech to our software, so the whole thing sometimes looks like magic to me. But before we get into that part, what exactly is an automated test, anyway?

Types of Automated Tests:

The simplest definition I can come up with is the following: an automated test is a piece of code that is started by an external trigger (e.g. a change to the product’s code being saved to the repository) and then validates assumptions about the behavior of the product or its components. Let me give you a few examples.

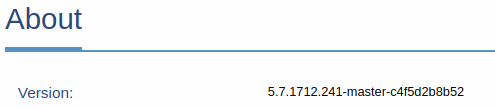

Unit Tests — Every MyGeotab release has a version number. For instance, this is the version of our latest cutting-edge build:

Different components of this version are needed by different departments, so it’s important that we include all of them. To make sure that this is always the case, we created a test that simply reads the product version, splits it into components using “-” as the separator, and confirms that:

- There are exactly three components.

- The first one is a numeric version number.

- The second one is a branch name.

- The last one is a checksum.

Because this test targets a small unit of functionality that doesn’t depend on anything else, we call it a unit test.
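As a rough illustration, a check like the one described above could look like the following Python sketch. The function name `parse_version`, the sample string `5.7.1234-main-a1b2c3d` and the exact rules for what counts as a valid number or checksum are all assumptions made for this example; the real MyGeotab version format is not shown here.

```python
import re


def parse_version(version: str) -> tuple[str, str, str]:
    """Split a version string into (number, branch, checksum) or raise ValueError."""
    parts = version.split("-")
    if len(parts) != 3:
        raise ValueError(f"expected exactly 3 components, got {len(parts)}")
    number, branch, checksum = parts
    # Assumption: the numeric part is dot-separated digits, e.g. "5.7.1234".
    if not re.fullmatch(r"\d+(\.\d+)*", number):
        raise ValueError(f"version number is not numeric: {number!r}")
    if not branch:
        raise ValueError("branch name is empty")
    # Assumption: the checksum is a non-empty alphanumeric token.
    if not re.fullmatch(r"[0-9A-Za-z]+", checksum):
        raise ValueError(f"checksum looks malformed: {checksum!r}")
    return number, branch, checksum


def test_version_has_three_valid_components():
    # Hypothetical version string, used only for illustration.
    number, branch, checksum = parse_version("5.7.1234-main-a1b2c3d")
    assert number == "5.7.1234"
    assert branch == "main"
    assert checksum == "a1b2c3d"
```

One caveat with this sketch: splitting on “-” assumes that branch names never contain a hyphen themselves.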

Integration Tests — Unfortunately, even if all of our tested units are fine, it doesn’t mean that they work well together. For instance, Geotab’s Software Development Kit makes it possible to register a new vehicle via an API call. This involves at least two parties: a web server that accepts the call and a database that stores the vehicle. We can’t get away with testing them individually, so we created a test (one of many) that makes that call, checks that something ended up in the database, and then makes another call to get that vehicle back.
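The three steps of that round trip (make the call, check the database, read the vehicle back) can be sketched in Python as below. This is only a stand-in: an in-memory SQLite database plays the role of the real database, and `handle_add_vehicle` and `handle_get_vehicle` are hypothetical handlers, not the actual MyGeotab API.

```python
import sqlite3


def create_database() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical vehicles table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE vehicles (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    return conn


def handle_add_vehicle(conn: sqlite3.Connection, name: str) -> int:
    """Hypothetical 'register vehicle' handler: store the vehicle, return its id."""
    cursor = conn.execute("INSERT INTO vehicles (name) VALUES (?)", (name,))
    conn.commit()
    return cursor.lastrowid


def handle_get_vehicle(conn: sqlite3.Connection, vehicle_id: int):
    """Hypothetical 'get vehicle' handler: return the vehicle as a dict, or None."""
    row = conn.execute(
        "SELECT id, name FROM vehicles WHERE id = ?", (vehicle_id,)
    ).fetchone()
    return None if row is None else {"id": row[0], "name": row[1]}


def test_register_vehicle_round_trip():
    conn = create_database()
    # Step 1: make the call that registers the vehicle.
    vehicle_id = handle_add_vehicle(conn, "Truck 42")
    # Step 2: check that something actually ended up in the database.
    count = conn.execute("SELECT COUNT(*) FROM vehicles").fetchone()[0]
    assert count == 1
    # Step 3: make another call and get the same vehicle back.
    assert handle_get_vehicle(conn, vehicle_id) == {"id": vehicle_id, "name": "Truck 42"}
```

The point of the sketch is that the test crosses the boundary between the handler and the storage layer, which a unit test of either piece alone would not do.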

Acceptance Tests — Acceptance tests are probably the most fun part to watch. Unlike integration or unit tests, which usually don’t produce visual side effects, acceptance tests emulate customer actions like opening a browser window, clicking buttons, selecting a time range in the date picker control, and so forth. We create those tests to check features as a whole.

The following video shows testing of the Exception Report filter: show all exception events, show by driver(s), by vehicle(s), for all organization groups, for a particular group, etc.

We have more than a thousand such tests, which check every noticeable feature, hundreds of times per day.

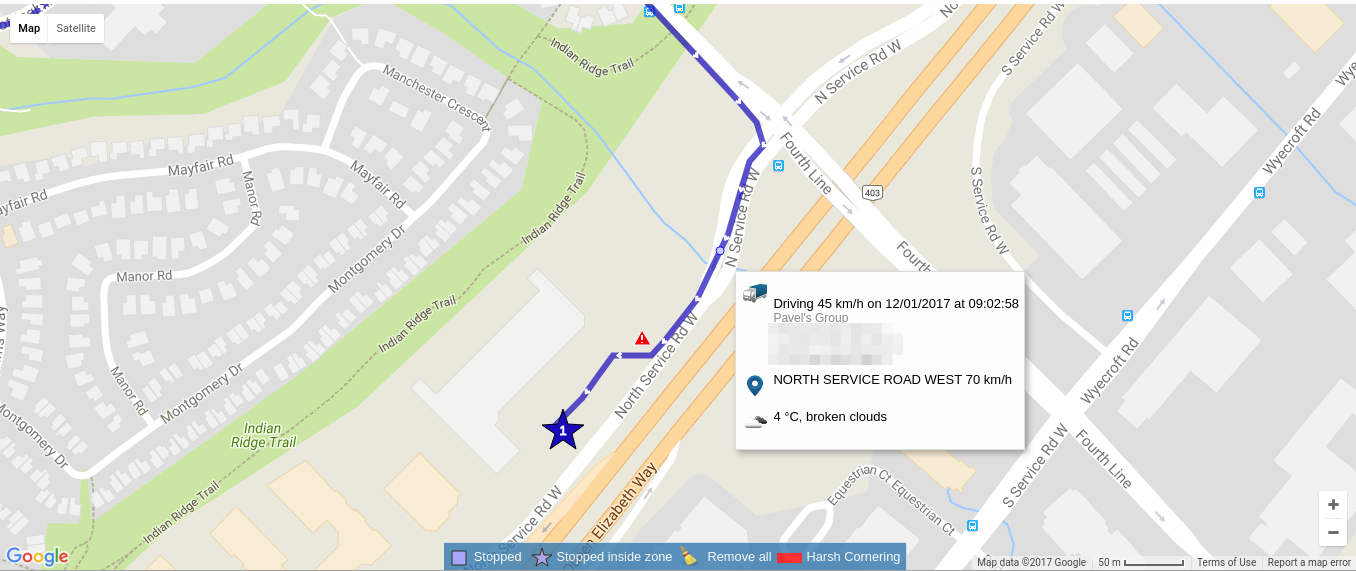

Contract Tests — Contract tests are probably the most bothersome. Being very close to integration tests, they check that we’re still able to use external services like geocoding, speed limit and weather providers, reporting, billing services, and so forth. Since they depend on parties we don’t control, they can fail even if we did nothing wrong. For instance, at least a few times per month a certain mapping provider changes street addresses at locations that we’ve chosen for our tests. Obviously, the test will fail.
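To make that failure mode concrete, here is a minimal Python sketch of a contract check against a geocoding provider. Everything in it is invented for illustration: `check_geocoding_contract`, the two stub providers, the coordinates and the addresses. A real contract test would call the external service instead of a stub.

```python
from typing import Callable

# A geocoder takes (latitude, longitude) and returns a street address.
Geocoder = Callable[[float, float], str]


def check_geocoding_contract(
    geocode: Geocoder, lat: float, lon: float, expected: str
) -> bool:
    """Return True if the provider still returns the address the test relies on."""
    try:
        return geocode(lat, lon) == expected
    except Exception:
        # Provider unreachable or erroring: the contract is considered broken.
        return False


def provider_before_change(lat: float, lon: float) -> str:
    """Stub provider returning the address the test was written against."""
    return "100 Example St"


def provider_after_change(lat: float, lon: float) -> str:
    """Stub provider after it renamed the same location on its side."""
    return "100 Example Street"


# Hypothetical test location and the address recorded when the test was written.
LAT, LON, EXPECTED = 43.0, -79.0, "100 Example St"

assert check_geocoding_contract(provider_before_change, LAT, LON, EXPECTED)
# Same code on our side, but the check now fails because the provider changed.
assert not check_geocoding_contract(provider_after_change, LAT, LON, EXPECTED)
```

Nothing changed in our own code between the two assertions, which is exactly why these failures are so annoying to triage.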

Just a handful of third-party services that we use: geocoding, speed limit, weather.

Now for the Magic — It’s All About the Cloud

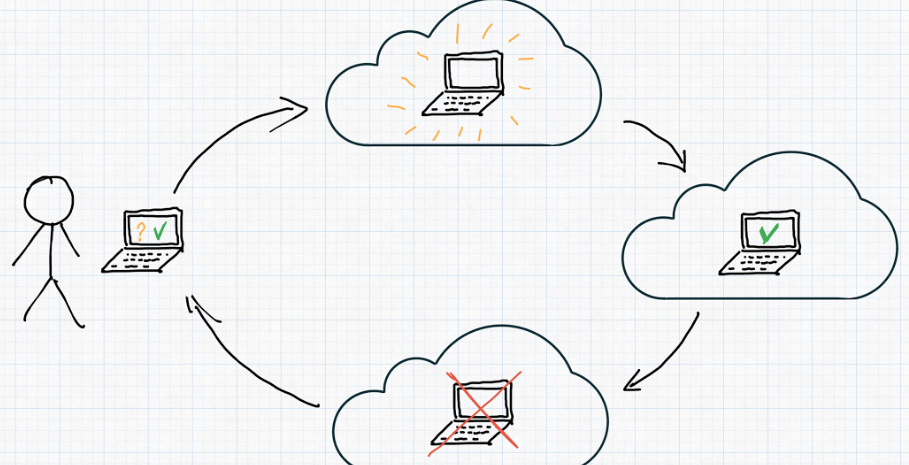

The most interesting part of our automated testing is the infrastructure. Over five hours of testing is too long. By the time the tests finish, the developer will probably have forgotten what the change was about, and in our profession fast feedback is essential.

Another issue is that we need pretty beefy laptops to run those: at least a quad-core CPU with 8-16 GB of RAM and an SSD. As you can imagine, a team of 25 developers would need 1-2 spare machines for the tests only, which is a lot and, frankly, not that convenient. So we made a workaround.

Whenever we need tests to run, we create a virtual machine in the cloud. It all happens automatically, and the developer might not even know where the test results are coming from.

But that’s still not good enough. We doubled the minimum specs of the testing machines to 8 cores and 24 GB of RAM (you can’t even buy a laptop like that), and instead of creating one machine, we create twelve of them and run the tests in parallel. As a result, absolutely all MyGeotab tests finish within 23-27 minutes, which is still a lot, but bearable.
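The numbers above are roughly consistent with simple division: 5 machine hours spread over twelve machines is 25 minutes if the work splits evenly. The Python sketch below shows that arithmetic together with the simplest possible way to split a test list, round-robin sharding. Both function names are made up for this example, and it ignores the faster hardware and any per-machine startup cost, so treat it as a back-of-the-envelope estimate rather than a description of the real scheduler.

```python
def shard_round_robin(tests: list[str], machines: int) -> list[list[str]]:
    """Deal tests out to machines one at a time, like dealing cards."""
    shards: list[list[str]] = [[] for _ in range(machines)]
    for index, test in enumerate(tests):
        shards[index % machines].append(test)
    return shards


def ideal_wall_clock_minutes(total_machine_hours: float, machines: int) -> float:
    """Wall-clock time if the total work divided perfectly across the machines."""
    return total_machine_hours * 60 / machines


# 5,000 placeholder test names split across twelve machines.
tests = [f"test_{i}" for i in range(5000)]
shards = shard_round_robin(tests, 12)

print(max(len(shard) for shard in shards))  # 417 tests on the busiest machine
print(ideal_wall_clock_minutes(5, 12))      # 25.0
```

Round-robin balances the number of tests per machine, not their duration, which is one reason real runs land in a range rather than on exactly 25 minutes.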

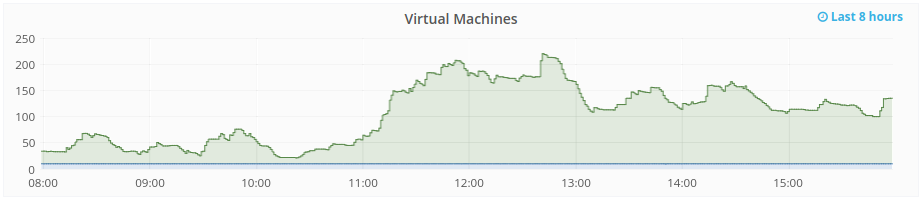

On our office wall, a big dashboard shows how many VMs are running at the moment. Coincidentally, yesterday we broke our own record with 210 virtual machines running tests side by side:

That’s a lot of computing power.

Next Steps

There are still things we can make better. Firstly, the way we distribute tests among the machines is not that effective. We could save money and increase execution speed by implementing fair load balancing for those machines.
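One well-known way to approach fairer distribution is a greedy “longest test first” assignment: sort tests by how long they took last time and always hand the next one to the machine with the least work so far. The Python sketch below illustrates that idea only. It is not our actual scheduler; the function name and the sample durations are invented, and it assumes that past durations are recorded and reasonably stable.

```python
import heapq


def balance_by_duration(durations: dict[str, float], machines: int) -> list[list[str]]:
    """Assign tests to machines greedily, longest test first, least-loaded machine next."""
    # Min-heap of (current load, machine index); the least-loaded machine is on top.
    heap = [(0.0, index) for index in range(machines)]
    heapq.heapify(heap)
    assignment: list[list[str]] = [[] for _ in range(machines)]
    longest_first = sorted(durations.items(), key=lambda item: item[1], reverse=True)
    for name, duration in longest_first:
        load, index = heapq.heappop(heap)
        assignment[index].append(name)
        heapq.heappush(heap, (load + duration, index))
    return assignment


# Hypothetical test durations in minutes.
durations = {"a": 10, "b": 9, "c": 2, "d": 1, "e": 1, "f": 1}
plan = balance_by_duration(durations, 2)
loads = [sum(durations[name] for name in machine) for machine in plan]
print(loads)  # [12, 12]
```

With the same six tests, dealing them out round-robin in the listed order would give loads of 13 and 11 minutes, so the slowest machine finishes later even though both machines run three tests.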

Another issue is unreliable tests. Because of the amount and the complexity of our acceptance tests, it’s natural that some of them occasionally fail without a related code change. This creates noise for us and makes the whole test result less trustworthy. If there’s only one failure in a test suite, is that a legitimate problem or just a one-off failure? As for contract tests, they are notorious for false positives. As of now, there is no silver bullet for those. Each test needs to be addressed individually: some can be made more reliable, some can be completely rewritten or even thrown away.
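A common first step in answering the “legitimate or one-off?” question is simply to re-run the failed test a few times and see whether it ever passes. The Python sketch below shows that triage idea; `classify_failure` and the deliberately unreliable sample test are hypothetical, and re-running only labels a failure, it does not fix the underlying test.

```python
from typing import Callable


def classify_failure(test: Callable[[], None], reruns: int = 3) -> str:
    """Re-run a test that just failed; 'flaky' if any rerun passes, else 'consistent'."""
    for _ in range(reruns):
        try:
            test()
        except AssertionError:
            continue  # failed again, try the next rerun
        return "flaky"  # passed without any code change
    return "consistent"


def make_test_failing_first(n: int) -> Callable[[], None]:
    """Build a sample test that fails on its first n calls and passes afterwards."""
    calls = {"count": 0}

    def test() -> None:
        calls["count"] += 1
        assert calls["count"] > n, "simulated intermittent failure"

    return test


print(classify_failure(make_test_failing_first(1)))   # flaky
print(classify_failure(make_test_failing_first(10)))  # consistent
```

A test labeled “flaky” this way still needs the individual attention described above; the label just keeps it from blocking an unrelated change while that happens.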

Finally, there’s another type of test that’s critical to software testing: stress testing. As a company dealing with enormous amounts of data, we must be sure that we’re able to handle it. In this scenario, a solution would emulate tens of thousands of active users (“dumb” bots, but with natural behavioral patterns) and vehicles hammering a single server.
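At a toy scale, the shape of such a stress test can be sketched in Python with a thread pool. This is an illustration under heavy assumptions: `FakeServer` is an in-process stand-in that only counts requests, the simulated users have no behavioral patterns at all, and the numbers are tiny compared with the tens of thousands of users mentioned above.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class FakeServer:
    """In-process stand-in for a server; it only counts the requests it handles."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.requests_handled = 0

    def handle(self, user_id: int) -> int:
        with self._lock:  # protect the counter from concurrent updates
            self.requests_handled += 1
        return user_id  # echo the id back as a trivial "response"


def run_stress(server: FakeServer, users: int, requests_per_user: int, workers: int = 32) -> int:
    """Simulate many users hitting one server; return the number of correct responses."""

    def simulate_user(user_id: int) -> int:
        return sum(
            1 for _ in range(requests_per_user) if server.handle(user_id) == user_id
        )

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(simulate_user, range(users)))


server = FakeServer()
correct = run_stress(server, users=100, requests_per_user=10)
print(correct, server.requests_handled)  # 1000 1000
```

A real stress test would replace `FakeServer.handle` with network calls and would measure latency and error rates, not just count responses.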


Illustration by Pavel Klimenkov.
